Part-III: A gentle introduction to Neural Network Backpropagation

In part-II, we derived the back-propagation formula for a simple neural net architecture that uses the sigmoid activation function. In this article, a follow-on to part-II, we expand the NN architecture by adding one more hidden layer and derive a generic back-propagation equation that can be applied to deep (multi-layered) neural networks.

This article attempts to explain back-propagation in an easier fashion using a simple NN, where each step is expanded in great detail and explained. After reading this text, readers are encouraged to work through the more complex derivations given in Mitchell's book and elsewhere to fully grasp the concept of back-propagation. You do need a basic understanding of partial derivatives; one of the best explanations is available in the videos by Sal Khan at Khan Academy.

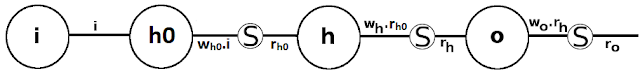

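The neural network shown above has 1 input unit, 2 hidden units (one in each hidden layer) and 1 output unit (neuron), with the sigmoid activation function applied at each hidden and output unit. Its input is \(i\), and \(w_{h0}\), \(w_h\) and \(w_o\) are the weights of the first hidden, second hidden and output units respectively.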

Here, \(r_{h0}=\sigma(w_{h0}.i)\), \(r_h=\sigma(w_h.r_{h0})\) and \(r_o=\sigma(w_o.r_h)\).
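The three definitions above can be sketched as a forward pass. This is a minimal illustration, assuming scalar weights and a scalar input; the function names and the sample values are hypothetical and not part of the original derivation.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(i: float, w_h0: float, w_h: float, w_o: float):
    """Forward pass through the chain input -> h0 -> h -> o.

    Returns the outputs (r_h0, r_h, r_o) of the three units.
    """
    r_h0 = sigmoid(w_h0 * i)   # first hidden unit
    r_h = sigmoid(w_h * r_h0)  # second hidden unit
    r_o = sigmoid(w_o * r_h)   # output unit
    return r_h0, r_h, r_o


# Illustrative values only (assumptions, not taken from the article).
r_h0, r_h, r_o = forward(i=1.0, w_h0=0.5, w_h=-0.3, w_o=0.8)
print(r_h0, r_h, r_o)
```

Every output lies strictly between 0 and 1 because each unit applies the sigmoid.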

If \(t\) is the expected (target) output in the training data, the error \(E\) is defined as:

$$

\begin{align}

E &= {(t - r_o)^2\over 2}\\

{\partial E\over \partial w_{o}}&={\partial {(t - r_o)^2\over 2}\over \partial w_o}\\

&={1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{o}} \text{ ... eq.(1.1)}

\end{align}

$$

Let's revisit the chain rule:

$$

{dx \over dy}= {dx \over dz}.{dz \over dy}

$$

For a partial derivative form:

$$

{\partial x \over \partial y}={\partial x\over \partial z}.{\partial z\over \partial y}

$$

Applying the chain rule to the right side of eq.(1.1),

$$

\text{Here, } z = {t - r_o},x=z^2, \text{ and } y=w_o \text{ ,therefore, }\\

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{o}}&={1 \over 2}.{\partial {x}\over \partial y}\\

&={1 \over 2}.{\partial x\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial z^2\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial (t - r_o)^2\over \partial (t - r_o)}.{\partial (t - r_o)\over \partial w_o}\\

&={1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{o}} \text{ ... eq.(1.2)}\\

\end{align}

$$

Further,

$$

\begin{align}

{\partial (t - r_o)\over \partial w_o}&={\partial (t - \sigma(w_o.r_h))\over \partial w_o}\\

&={\partial t\over \partial w_o} - {\partial \sigma(w_o.r_h)\over \partial w_o}\\

&=0 - {\partial \sigma(w_o.r_h)\over \partial w_o} \text{ (as,} {\partial C \over \partial x}=0, \text {where C is a constant)}, \text{ ... eq.(1.3)}\\

\end{align}

$$

Let's solve for \({\partial \sigma(w_o.r_h)\over \partial w_o}\),

Applying the chain rule again,

$$

\text{Here, } z = {w_o.r_h},x=\sigma (z), \text{ and } y=w_o \text{ ,therefore, }\\

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_o}&={\partial x \over \partial y}\\

&={\partial x\over \partial z}.{\partial z\over \partial y}\\

&={\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_o} \text{ ... eq.(1.4)}\\

\end{align}

$$

The sigmoid function is \({\sigma(x)} = {1 \over {1+e^{-x}}}\), with its derivative being:

$$

{d\sigma(x) \over dx} = {\sigma(x).(1 - \sigma(x))}

$$
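The sigmoid derivative rule can be checked numerically. The sketch below compares \(\sigma(x).(1 - \sigma(x))\) against a central finite difference; the helper names, the step size `eps` and the sample points are assumptions chosen for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x: float) -> float:
    """Analytic derivative: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_derivative(f, x: float, eps: float = 1e-6) -> float:
    """Central finite-difference approximation of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)


# The two columns should agree to many decimal places.
for x in (-2.0, 0.0, 1.5):
    print(x, sigmoid_prime(x), numeric_derivative(sigmoid, x))
```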

Applying the sigmoid differentiation rule to eq.(1.4),

$$

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_o}&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_o}\\

&={\sigma (w_o.r_h).(1- \sigma (w_o.r_h)}).{\partial (w_o.r_h)\over \partial w_o}\\

&={\sigma (w_o.r_h).(1- \sigma (w_o.r_h)}).r_h.{\partial (w_o)\over \partial w_o}\\

&={\sigma (w_o.r_h).(1- \sigma (w_o.r_h)}).{r_h}\\

\end{align}

$$

Therefore in eq.(1.3),

$$

\begin{align}

{\partial (t - r_o)\over \partial w_o}&=0 - {\partial \sigma(w_o.r_h)\over \partial w_o} \\

&=0 - {\sigma (w_o.r_h).(1- \sigma (w_o.r_h)}).{r_h}

\end{align}

$$

And in eq.(1.2),

$$

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{o}}&={1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{o}}\\

&=(t - r_o).(0 - {\sigma (w_o.r_h).(1 - \sigma (w_o.r_h)}).{r_h})

\end{align}

$$

Using the above derived value of \({1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{o}}\),

$$

\begin{align}

\partial E\over \partial w_{o}&={\partial {(t - r_o)^2\over 2}\over \partial w_o}\\

&={1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{o}}\\

&=(t - r_o).(0 - {\sigma (w_o.r_h).(1 - \sigma (w_o.r_h)}).{r_h})\\

&=-(t - r_o).{\sigma (w_o.r_h).(1 - \sigma (w_o.r_h))}.{r_h}\\

&=-(t - r_o).{r_o.(1 - r_o)}.{r_h} \text { (as,} r_o = \sigma(w_o.r_h) \text{)} \text { ...eq.(1.5)} \\

\end{align}

$$
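As a sanity check on eq.(1.5), the analytic value of \({\partial E\over \partial w_o}\) can be compared with a finite-difference estimate of the same quantity. This is a sketch under assumed sample values; the function names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def error(i, t, w_h0, w_h, w_o):
    """E = (t - r_o)^2 / 2 for the three-weight chain."""
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)
    return 0.5 * (t - r_o) ** 2


def grad_w_o(i, t, w_h0, w_h, w_o):
    """Analytic dE/dw_o from eq.(1.5)."""
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)
    return -(t - r_o) * r_o * (1.0 - r_o) * r_h


def numeric_grad_w_o(i, t, w_h0, w_h, w_o, eps=1e-6):
    """Central finite difference, perturbing w_o only."""
    up = error(i, t, w_h0, w_h, w_o + eps)
    down = error(i, t, w_h0, w_h, w_o - eps)
    return (up - down) / (2.0 * eps)


# Illustrative values (assumptions, not from the article).
args = dict(i=1.0, t=0.9, w_h0=0.5, w_h=-0.3, w_o=0.8)
print(grad_w_o(**args), numeric_grad_w_o(**args))
```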

Let us now find how \(E\) changes in relation to \(w_h\), that is, the rate of change of \(E\) w.r.t. \(w_h\), the weight of the second hidden layer. This is the partial derivative of \(E\) w.r.t. \(w_h\), written \({\partial E\over \partial w_h}\).

$$

\begin{align}

{\partial E\over \partial w_{h}}&={\partial {(t - r_o)^2\over 2}\over \partial w_{h}}\\

&={1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h}} \text{ ...eq.(2.1)}\\

\end{align}

$$

Applying the chain rule to the right side of eq.(2.1),

$$

\text{Here, } z = {t - r_o},x=z^2, \text{ and } y=w_h \text{ ,therefore, }\\

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h}}&={1 \over 2}.{\partial {x}\over \partial y}\\

&={1 \over 2}.{\partial x\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial z^2\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial (t - r_o)^2\over \partial (t - r_o)}.{\partial (t - r_o)\over \partial w_h}\\

&={1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{h}} \text{ ... eq.(2.2)}\\

\end{align}

$$

Solve for the right side of eq.(2.2),\({\partial (t - r_o)\over \partial w_h}\),

$$

\begin{align}

{\partial (t - r_o)\over \partial w_h}&={\partial (t - \sigma(w_o.r_h))\over \partial w_h}\\

&={\partial t\over \partial w_h} - {\partial \sigma(w_o.r_h) \over \partial w_h}\\

&=0 - {\partial \sigma(w_o.r_h) \over \partial w_h} \text{ (as } t \text{ is a constant) ...eq.(2.3)}\\

\end{align}

$$

Applying the chain rule to the right side of eq.(2.3), \({\partial \sigma(w_o.r_h) \over \partial w_h}\),

$$

\text{Here, } z = {w_o.r_h},x=\sigma(z), \text{ and } y=w_h \text{ ,therefore, }\\

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_h}&={\partial x \over \partial y}\\

&={\partial x\over \partial z}.{\partial z\over \partial y}\\

&={\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_h} \text{ ... eq.(2.4)}\\

\end{align}

$$

We know,

$$

{d\sigma(x) \over dx} = {\sigma(x).(1 - \sigma(x))}

$$

Applying the sigmoid differentiation rule to eq.(2.4),

$$

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_h}&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_h}\\

&={\sigma(w_o.r_h).(1- \sigma (w_o.r_h)}).{\partial (w_o.r_h)\over \partial w_h}\\

&={r_o.(1- r_o}).{\partial (w_o.r_h)\over \partial w_h} \text {(as,} r_o =\sigma(w_o.r_h) \text{)} \text{ ... eq.(2.5)} \\

\end{align}

$$

Solve for right side of eq.(2.5), \({\partial (w_o.r_h)\over \partial w_h}\),

$$

\begin{align}

{\partial (w_o.r_h)\over \partial w_h}&={\partial (w_o.{\sigma(w_h.r_{h0})})\over \partial w_h} (\text {as,} r_h = \sigma(w_h.r_{h0}))\\

&=w_o.{\partial {\sigma(w_h.r_{h0})}\over \partial w_h} \text{ ... eq.(2.6)}\\

\end{align}

$$

Applying the chain rule to right side of eq.(2.6), \({\partial {\sigma(w_h.r_{h0})}\over \partial w_h}\),

$$

\text{Here, } z = {w_h.r_{h0}},x=\sigma(z), \text{ and } y=w_h \text{ ,therefore, }\\

\begin{align}

{\partial {\sigma(w_h.r_{h0})}\over \partial w_h}&={\partial x \over \partial y}\\

&={\partial x\over \partial z}.{\partial z\over \partial y}\\

&={\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

&={\partial \sigma (w_h.r_{h0})\over \partial (w_h.r_{h0})}.{\partial (w_h.r_{h0})\over \partial w_h} \\

&={\sigma(w_h.r_{h0}).(1- \sigma(w_h.r_{h0}))}.(r_{h0}.{\partial w_h\over \partial w_h}) \text { (as,} {d\sigma(x) \over dx} = {\sigma(x).(1 - \sigma(x))} \text{)}\\

&={\sigma(w_h.r_{h0}).(1- \sigma(w_h.r_{h0}))}.r_{h0} \text{ ... eq.(2.7)} \\

\end{align}

$$

Hence in eq.(2.6),

$$

\begin{align}

{\partial (w_o.r_h)\over \partial w_h}&=w_o.{\partial {\sigma(w_h.r_{h0})}\over \partial w_h}\\

&=w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0} \text { (from, eq.(2.7))} \text { ...eq.(2.8)}

\end{align}

$$

Applying this value in eq.(2.5),

$$

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_h}&={r_o.(1 - r_o)}.{\partial (w_o.r_h)\over \partial w_h}\\

&={r_o.(1 - r_o)}.w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0} \text { (from eq.(2.8))}\\

\end{align}

$$

Applying this in eq.(2.3),

$$

\begin{align}

{\partial (t - r_o)\over \partial w_h}&=0 - {\partial \sigma(w_o.r_h) \over \partial w_h} \\

&=0 - {r_o.(1 - r_o)}.w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0}

\end{align}

$$

And in eq.(2.2),

$$

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h}}&={1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{h}}\\

&={1 \over 2}.{2(t - r_o)}.(0 - {r_o.(1 - r_o)}.w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0}) \text{ (from eq.(2.3))}

\end{align}

$$

Using the above derived value of \({1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h}}\),

$$

\begin{align}

{\partial E\over \partial w_{h}}=&{\partial {(t - r_o)^2\over 2}\over \partial w_{h}}\\

=&{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h}} \\

=&{1 \over 2}.{2(t - r_o)}.{(0 - {r_o.(1 - r_o)}.w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0})} \\

=&(t - r_o).{(0 - {r_o.(1 - r_o}).w_o.{\sigma(w_h.r_{h0}).(1-\sigma(w_h.r_{h0}))}.r_{h0})}\\

=&-(t - r_o).{r_o.(1 - r_o).w_o.r_h.(1-r_h).r_{h0}} \text { ...eq.(2.9)}\\

&\text{ (as,} r_h=\sigma(w_h.r_{h0}) \text{)}

\end{align}

$$
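As a compact cross-check of eq.(2.9), the same result can be read off as a product of three local derivatives. The grouping below only rearranges the steps already derived; it is not an additional result.

$$
\begin{align}
{\partial E\over \partial w_h}&={\partial E\over \partial r_o}.{\partial r_o\over \partial r_h}.{\partial r_h\over \partial w_h}\\
&=-(t - r_o).\big(r_o.(1 - r_o).w_o\big).\big(r_h.(1 - r_h).r_{h0}\big)
\end{align}
$$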

Let us now find how \(E\) changes in relation to \(w_{h0}\) or the rate of change of \(E\) w.r.t. \(w_{h0}\) the weight of the first hidden layer.

$$

\begin{align}

{\partial E\over \partial w_{h0}}&={\partial {(t - r_o)^2\over 2}\over \partial w_{h0}}\\

&={1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h0}} \text{ ...eq.(3.1)}\\

\end{align}

$$

Applying the chain rule to the right side of eq.(3.1),

$$

\text{Here, } z = {t - r_o},x=z^2, \text{ and } y=w_{h0} \text{ ,therefore, }\\

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h0}}&={1 \over 2}.{\partial {x}\over \partial y}\\

&={1 \over 2}.{\partial x\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial z^2\over \partial z}.{\partial z\over \partial y}\\

&={1 \over 2}.{\partial (t - r_o)^2\over \partial (t - r_o)}.{\partial (t - r_o)\over \partial w_{h0}}\\

&={1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{h0}} \text{ ... eq.(3.2)}\\

\end{align}

$$

Solve for the right side of eq.(3.2),\({\partial (t - r_o)\over \partial w_{h0}}\),

$$

\begin{align}

{\partial (t - r_o)\over \partial w_{h0}}&={\partial (t - \sigma(w_o.r_h))\over \partial w_{h0}}\\

&={\partial t\over \partial w_{h0}} - {\partial \sigma(w_o.r_h) \over \partial w_{h0}}\\

&=0 - {\partial \sigma(w_o.r_h) \over \partial w_{h0}} \text{ ...eq.(3.3)}\\

\end{align}

$$

Applying the chain rule to the right side of eq.(3.3), \({\partial \sigma(w_o.r_h) \over \partial w_{h0}}\),

$$

\text{Here, } z = {w_o.r_h},x=\sigma(z), \text{ and } y=w_{h0} \text{ ,therefore, }\\

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_{h0}}&={\partial x \over \partial y}\\

&={\partial x\over \partial z}.{\partial z\over \partial y}\\

&={\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_{h0}} \text{ ... eq.(3.4)}\\

\end{align}

$$

We know,

$$

{d\sigma(x) \over dx} = {\sigma(x).(1 - \sigma(x))}

$$

Applying the sigmoid differentiation rule to eq.(3.4),

$$

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_{h0}}&={\partial \sigma (w_o.r_h)\over \partial (w_o.r_h)}.{\partial (w_o.r_h)\over \partial w_{h0}}\\

&={\sigma(w_o.r_h).(1- \sigma (w_o.r_h)}).{\partial (w_o.r_h)\over \partial w_{h0}}\\

&={r_o.(1- r_o)}.{\partial (w_o.r_h)\over \partial w_{h0}} \text {(as,} r_o =\sigma(w_o.r_h) \text{)} \text{ ... eq.(3.5)} \\

\end{align}

$$

Solve for right side of eq.(3.5), \({\partial (w_o.r_h)\over \partial w_{h0}}\),

$$

\begin{align}

{\partial (w_o.r_h)\over \partial w_{h0}}&={\partial (w_o.{\sigma(w_h.r_{h0})})\over \partial w_{h0}} (\text {as,} r_h = \sigma(w_h.r_{h0}))\\

&=w_o.{\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}} \text{ ... eq.(3.6)}\\

\end{align}

$$

Applying the chain rule to right side of eq.(3.6), \({\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}}\),

$$

\text{Here, } z = {w_h.r_{h0}},x=\sigma(z), \text{ and } y=w_{h0} \text{ ,therefore, }\\

\begin{align}

{\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}}=&{\partial x \over \partial y}\\

=&{\partial x\over \partial z}.{\partial z\over \partial y}\\

=&{\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

=&{\partial \sigma (w_h.r_{h0})\over \partial (w_h.r_{h0})}.{\partial (w_h.r_{h0})\over \partial w_{h0}} \\

=&{\sigma(w_h.r_{h0}).(1 - \sigma(w_h.r_{h0}))}.{\partial (w_h.r_{h0})\over \partial w_{h0}} \text{ ... eq.(3.7)} \\

&\text { (as,} {d(\sigma(x)) \over dx} = {\sigma(x).(1 - \sigma(x))} \text{)}

\end{align}

$$

Let us solve for the right term of eq.(3.7),\({\partial (w_h.r_{h0})\over \partial w_{h0}}\)

$$

\begin{align}

{\partial (w_h.r_{h0})\over \partial w_{h0}}&={\partial (w_h.(\sigma (w_{h0}.i))) \over \partial w_{h0}} \text {(as,} r_{h0}=\sigma(w_{h0}.i) \text {)}\\

&=w_h.{\partial (\sigma (w_{h0}.i))\over \partial w_{h0}} \text{ ... eq.(3.8)}\\

\end{align}

$$

Applying the chain rule to right side of eq.(3.8), \({\partial {\sigma (w_{h0}.i})\over \partial w_{h0}}\),

$$

\text{Here, } z = {w_{h0}.i},x=\sigma(z), \text{ and } y=w_{h0} \text{ ,therefore, }\\

\begin{align}

{\partial {\sigma(w_{h0}.i)}\over \partial w_{h0}}&={\partial x \over \partial y}\\

&={\partial \sigma(z)\over \partial z}.{\partial z\over \partial y} \\

&={\partial \sigma (w_{h0}.i)\over \partial (w_{h0}.i)}.{\partial (w_{h0}.i)\over \partial w_{h0}} \\

&={\sigma (w_{h0}.i).( 1 - \sigma (w_{h0}.i))}.i.{\partial (w_{h0})\over \partial w_{h0}} \text { (applying value of} {\partial {\sigma (x)} \over \partial x} \text {)}\\

&={\sigma (w_{h0}.i).( 1 - \sigma (w_{h0}.i))}.i \text { (as,} {\partial x \over \partial x}=1 \text {) ... eq. (3.9)} \\

\end{align}

$$

Applying value of eq. (3.9) in eq.(3.8),

$$

\begin{align}

{\partial (w_h.r_{h0})\over \partial w_{h0}}&=w_h.{\partial {\sigma (w_{h0}.i})\over \partial w_{h0}}\\

&=w_h.{{\sigma (w_{h0}.i).( 1 - \sigma (w_{h0}.i))}.i} \text { (from, eq.(3.9)) ... eq.(3.10)}

\end{align}

$$

Applying the value of eq.(3.10) in eq.(3.7),

$$

\begin{align}

{\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}}=&{\sigma(w_h.r_{h0}).(1- \sigma(w_h.r_{h0}))}.{\partial (w_h.r_{h0})\over \partial w_{h0}}\\

=&{\sigma(w_h.r_{h0}).(1- \sigma(w_h.r_{h0}))}.w_h.{\sigma (w_{h0}.i).( 1 - \sigma (w_{h0}.i))}.i\\

&\text {(putting value of } {\partial (w_h.r_{h0})\over \partial w_{h0}} \text{ from eq.(3.10))} \\

=&r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i \text { ... eq.(3.11)}\\

&\text {(substituting } r_{h} \text{ and } r_{h0} \text{ for } \sigma (w_h.r_{h0}) \text { and } \sigma (w_{h0}.i) \text { respectively, for the sake of brevity)} \\

\end{align}

$$

Applying the value of eq.(3.11) in eq.(3.6),

$$

\begin{align}

{\partial (w_o.r_h)\over \partial w_{h0}}=&w_o.{\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}}\\

=&w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i \text { ... eq.(3.12)}\\

&\text {(substituting value of } {\partial {\sigma(w_h.r_{h0})}\over \partial w_{h0}} \text{ from eq.(3.11))}\\

\end{align}

$$

Applying value of eq.(3.12) in eq.(3.5),

$$

\begin{align}

{\partial \sigma(w_o.r_h)\over \partial w_{h0}}=&r_o.(1 - r_o).{\partial (w_o.r_h)\over \partial w_{h0}} \\

=&r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i \text { ... eq.(3.13)}\\

&\text {(from eq. (3.12))}\\

\end{align}

$$

Applying value of eq.(3.13) in eq.(3.3),

$$

\begin{align}

{\partial (t - r_o)\over \partial w_{h0}}=&0 - {\partial \sigma(w_o.r_h) \over \partial w_{h0}}\\

=&0 - (r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i) \text {... eq.(3.14)}\\

&\text {(from eq. (3.13))}\\

\end{align}

$$

Applying value of eq.(3.14) in eq.(3.2),

$$

\begin{align}

{1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h0}}=&{1 \over 2}.{2(t - r_o)}.{\partial (t - r_o)\over \partial w_{h0}}\\

=&{1 \over 2}.{2(t - r_o)}.(0 - (r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i)) \text { (from eq. (3.14))}\\

=&-{1 \over 2}.{2(t - r_o)}.(r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i)\\

\end{align}

$$

Using the above derived value of \({1 \over 2}.{\partial {(t - r_o)^2}\over \partial w_{h0}}\),

$$

\begin{align}

{\partial E\over \partial w_{h0}}=&{\partial {(t - r_o)^2\over 2}\over \partial w_{h0}}\\

=&-{1 \over 2}.{2(t - r_o)}.(r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i)\\

=&-(t - r_o).r_o.(1 - r_o).w_o.r_h.(1 - r_h).w_h.r_{h0}.(1 - r_{h0}).i \text { ...eq.(3.15)}\\

\end{align}

$$
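Eq.(3.15) is the longest product in the derivation, so it is worth confirming numerically. The sketch below, with hypothetical function names and assumed sample values, perturbs only \(w_{h0}\) and compares the resulting finite-difference slope with the analytic expression.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def error(i, t, w_h0, w_h, w_o):
    """E = (t - r_o)^2 / 2 for the three-weight chain."""
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)
    return 0.5 * (t - r_o) ** 2


def grad_w_h0(i, t, w_h0, w_h, w_o):
    """Analytic dE/dw_h0 from eq.(3.15)."""
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)
    return (-(t - r_o) * r_o * (1.0 - r_o) * w_o
            * r_h * (1.0 - r_h) * w_h
            * r_h0 * (1.0 - r_h0) * i)


def numeric_grad_w_h0(i, t, w_h0, w_h, w_o, eps=1e-6):
    """Central finite difference, perturbing w_h0 only."""
    up = error(i, t, w_h0 + eps, w_h, w_o)
    down = error(i, t, w_h0 - eps, w_h, w_o)
    return (up - down) / (2.0 * eps)


# Illustrative values (assumptions, not from the article).
args = dict(i=0.7, t=0.9, w_h0=0.5, w_h=-0.3, w_o=0.8)
print(grad_w_h0(**args), numeric_grad_w_h0(**args))
```

Note how small the value is compared with the gradient for \(w_o\): every extra layer multiplies in another \(r.(1 - r)\) factor, which is at most 0.25.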

General rule:

From eqs. (1.5),(2.9) and (3.15) we see that,

$$

\begin{align}

{\partial E\over \partial w_{o}}=&-(t - r_o).r_o.(1 - r_o).r_h\\

{\partial E\over \partial w_{h}}=&-(t - r_o).r_o.(1 - r_o).r_h.w_o.(1 - r_h).r_{h0}\\

{\partial E\over \partial w_{h0}}=&-(t - r_o).r_o.(1 - r_o).r_h.w_o.(1 - r_h).r_{h0}.w_h.(1 - r_{h0}).i\\

\end{align}

$$

A clear pattern can be seen: the rate of change of \(E\) w.r.t. each unit's weight depends on the rate of change of \(E\) w.r.t. the weight of the unit immediately downstream. Hence, we can rewrite the above equations as:

$$

\begin{align}

{\partial E\over \partial w_{o}}=&-(t - r_o).r_o.(1 - r_o).r_h \\

{\partial E\over \partial w_{h}}=&{\partial E\over \partial w_{o}}.w_o.(1-r_h).r_{h0}\\

{\partial E\over \partial w_{h0}}=&{\partial E\over \partial w_{h}}.w_h.(1 - r_{h0}).i\\

\end{align}

$$
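The recursive form maps directly onto code: each gradient reuses the one computed just before it. A minimal sketch, assuming the same three-weight chain and hypothetical function names:

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gradients(i, t, w_h0, w_h, w_o):
    """Return (dE/dw_o, dE/dw_h, dE/dw_h0) using the recursive pattern."""
    # Forward pass.
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)

    # Backward pass: start at the output and reuse each result upstream.
    dE_dw_o = -(t - r_o) * r_o * (1.0 - r_o) * r_h
    dE_dw_h = dE_dw_o * w_o * (1.0 - r_h) * r_h0
    dE_dw_h0 = dE_dw_h * w_h * (1.0 - r_h0) * i
    return dE_dw_o, dE_dw_h, dE_dw_h0


# Illustrative values (assumptions, not from the article).
print(gradients(i=0.7, t=1.0, w_h0=0.5, w_h=-0.3, w_o=0.8))
```

Working backwards from the output is what gives back-propagation its name: no product is recomputed from scratch.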

The general form of \({\partial E\over \partial w_{u}}\), is:

$$

\begin{align}

&\text {Output unit,}\\

{\partial E\over \partial w_{u}}=&-(t - r_o).r_o.(1 - r_o).i_{u}\\

&\text {Hidden unit,}\\

{\partial E\over \partial w_{u}}=&{\partial E\over \partial w_{u+1}}.w_{u+1}.(1 - r_{u}).i_{u}\\

&\text {or, for the hidden unit that feeds the output unit directly,}\\

{\partial E\over \partial w_{u}}=&-(t - r_o).r_o.(1 - r_o).w_{u+1}.r_{u}.(1 - r_{u}).i_{u}\\

&\text {where,}\\

&w_{u} \text{: weight of unit } u \\

&w_{u+1} \text{:weight of unit downstream of } u\\

&r_{u} \text{: output of unit } u\\

&i_{u} \text{: input to the unit } u\\

\end{align}

$$
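The general form extends to a chain of any depth. The sketch below assumes one unit per layer, as in this article, and stores the weights in a list ordered from input to output; `chain_gradients` and its argument names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def chain_gradients(i, t, weights):
    """Gradients dE/dw_u for a chain of single sigmoid units.

    weights[0] belongs to the unit nearest the input and
    weights[-1] to the output unit.
    """
    # Forward pass, remembering each unit's input i_u and output r_u.
    inputs, outputs = [], []
    x = i
    for w in weights:
        inputs.append(x)
        x = sigmoid(w * x)
        outputs.append(x)

    r_o = outputs[-1]
    grads = [0.0] * len(weights)

    # Output unit: -(t - r_o) . r_o . (1 - r_o) . i_u
    grads[-1] = -(t - r_o) * r_o * (1.0 - r_o) * inputs[-1]

    # Hidden units, walking backwards: dE/dw_{u+1} . w_{u+1} . (1 - r_u) . i_u
    for u in range(len(weights) - 2, -1, -1):
        grads[u] = grads[u + 1] * weights[u + 1] * (1.0 - outputs[u]) * inputs[u]
    return grads


# Four units instead of three; values are illustrative assumptions.
print(chain_gradients(i=0.7, t=1.0, weights=[0.5, -0.3, 0.8, 1.2]))
```

The recursion works because the input to unit \(u+1\) is the output \(r_u\) of unit \(u\), so that factor is already contained in \({\partial E\over \partial w_{u+1}}\).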

\({\partial E\over \partial w_u}\) is the rate of change of \(E\) w.r.t. \(w_u\). When this rate of change becomes 0 or close to it, we have reached a flat point in the error space, which for gradient descent is typically a minimum of the error (possibly only a local one). The goal is to change the weights in such a way that we reach this point after multiple iterations.

If the rate of change is positive, increasing \(w_u\) increases the error \(E\); if it is negative, increasing \(w_u\) decreases \(E\). To reduce the error, we therefore need to move the weight in the direction opposite to the rate of change. Hence, the sign of the change to \(w_u\) (an increase or decrease in the value of the weight) is determined by \(-{\partial E\over \partial w_u}\). Note the negative sign, which ensures the weights are modified in a way that reduces the error.

Based on this idea, the generic update rule for the weight \(w_u\) of a unit \(u\) is given as:

$$

\begin{align}

\Delta w_u=&-\eta.{\partial E\over \partial w_u}\\

&\text {for an output unit,}\\

=&-\eta.-(t - r_o).r_o.(1 - r_o).i_{u}\\

=&\eta.(t - r_o).r_o.(1 - r_o).i_{u}\\

&\text {for a hidden unit,}\\

=&-\eta.{\partial E\over \partial w_{u+1}}.w_{u+1}.(1 - r_{u}).i_{u}\\

&\text {which, for the hidden unit that feeds the output unit directly, is}\\

=&\eta.(t - r_o).r_o.(1 - r_o).w_{u+1}.r_{u}.(1 - r_{u}).i_{u}\\

&\text {weight update for all units,}\\

\Rightarrow w_u =& w_u + \Delta w_u\\

&\text {where,}\\

&w_{u} \text{ :weight of unit } u \\

&w_{u+1} \text{ :weight of unit downstream of } u\\

&r_{u} \text{ :output of unit } u\\

&i_{u} \text{ :the input to the unit } u\\

\end{align}

$$

Here \(\eta\) is the learning rate and controls the numeric value by which weights are changed.
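To see the update rule in action, the sketch below repeatedly applies \(w_u = w_u - \eta.{\partial E\over \partial w_u}\) to all three weights for a single training sample. The starting weights, the sample \((i, t)\), the learning rate and the number of steps are all assumptions picked for illustration.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train_step(i, t, weights, eta):
    """One gradient-descent update.

    Returns (new_weights, error_before_update).
    """
    w_h0, w_h, w_o = weights

    # Forward pass.
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    r_o = sigmoid(w_o * r_h)
    err = 0.5 * (t - r_o) ** 2

    # Backward pass using the recursive gradients.
    g_o = -(t - r_o) * r_o * (1.0 - r_o) * r_h
    g_h = g_o * w_o * (1.0 - r_h) * r_h0
    g_h0 = g_h * w_h * (1.0 - r_h0) * i

    # Delta w = -eta * dE/dw for every weight.
    new_weights = (w_h0 - eta * g_h0, w_h - eta * g_h, w_o - eta * g_o)
    return new_weights, err


weights = (0.5, -0.3, 0.8)
errors = []
for _ in range(500):
    weights, err = train_step(i=1.0, t=0.9, weights=weights, eta=0.5)
    errors.append(err)

print("first error:", errors[0], "last error:", errors[-1])
```

A larger \(\eta\) takes bigger steps and can overshoot; a smaller one is safer but needs more iterations.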

The weights can be updated after each training sample (stochastic gradient descent), after a subset of training samples (mini-batch gradient descent), or after a full pass over the training data (standard/batch gradient descent).
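The difference between these schedules is only in when the update happens. The sketch below contrasts the per-sample and full-batch variants on a tiny made-up data set; the function names and data are hypothetical, and the batch version sums the gradients (averaging them is an equally common choice).

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def forward(i, w):
    w_h0, w_h, w_o = w
    r_h0 = sigmoid(w_h0 * i)
    r_h = sigmoid(w_h * r_h0)
    return r_h0, r_h, sigmoid(w_o * r_h)


def gradients(i, t, w):
    """Return (dE/dw_h0, dE/dw_h, dE/dw_o) for one sample."""
    w_h0, w_h, w_o = w
    r_h0, r_h, r_o = forward(i, w)
    g_o = -(t - r_o) * r_o * (1.0 - r_o) * r_h
    g_h = g_o * w_o * (1.0 - r_h) * r_h0
    g_h0 = g_h * w_h * (1.0 - r_h0) * i
    return g_h0, g_h, g_o


def total_error(data, w):
    return sum(0.5 * (t - forward(i, w)[2]) ** 2 for i, t in data)


def sgd_epoch(data, w, eta):
    """Stochastic: update the weights after every sample."""
    for i, t in data:
        g = gradients(i, t, w)
        w = tuple(wk - eta * gk for wk, gk in zip(w, g))
    return w


def batch_epoch(data, w, eta):
    """Batch: sum the gradients over all samples, then update once."""
    total = [0.0, 0.0, 0.0]
    for i, t in data:
        g = gradients(i, t, w)
        total = [a + b for a, b in zip(total, g)]
    return tuple(wk - eta * gk for wk, gk in zip(w, total))


# Made-up (input, target) pairs; targets lie in (0, 1) like the sigmoid output.
data = [(0.2, 0.3), (1.0, 0.6), (2.0, 0.8)]
start = (0.5, -0.3, 0.8)
w_sgd, w_batch = start, start
for _ in range(200):
    w_sgd = sgd_epoch(data, w_sgd, eta=0.1)
    w_batch = batch_epoch(data, w_batch, eta=0.1)
print(total_error(data, start), total_error(data, w_sgd), total_error(data, w_batch))
```

A mini-batch version would sit between the two: split `data` into small groups and call the batch update on each group in turn.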

An important thing to note here is that we do not have to base the weight update on the actual numeric values of \({\partial E\over \partial w_u}\), since their \(\pm\) sign alone is enough to determine whether a weight has to be increased or decreased. However, for simplicity, the original back-propagation uses their values directly, with \(\eta\) controlling their impact on the weight update.

In later variants of back-propagation (like RProp), weights are not updated using the numeric values of \({\partial E\over \partial w_o}\) and \({\partial E\over \partial w_h}\); only their \(\pm\) sign is used.
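A sign-only update can be sketched as follows. This is a deliberately simplified illustration with a fixed step size, not an implementation of RProp itself, which additionally adapts a separate step size for each weight; the function names and the `step` parameter are assumptions.

```python
def sign(x: float) -> int:
    """Return +1, -1 or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def sign_update(weights, grads, step=0.01):
    """Move each weight by a fixed step against the sign of its gradient.

    The magnitude of the gradient is ignored entirely.
    """
    return [w - step * sign(g) for w, g in zip(weights, grads)]


# A tiny gradient and a huge one of the same sign move the weight equally.
print(sign_update([1.0, 1.0, 1.0], [0.0003, 250.0, -0.5], step=0.1))
```

Ignoring the magnitude makes the step size independent of how small the gradient becomes in the early layers, where many \(r.(1 - r)\) factors are multiplied together.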

This concludes the gentle introduction to NN backpropagation. In another article, we will derive backpropagation for a convolutional neural network (CNN).
